May 13, 2025

Top Tools for Reducing Bias in AI Characters

Explore essential tools for reducing bias in AI characters, ensuring equitable behavior across diverse demographics and enhancing user experiences.

Want to build fair AI characters? Here's your solution: Use tools specifically designed to detect and reduce bias in AI systems. They help ensure AI behaves equitably across diverse demographics.

Key Tools for Reducing Bias:

Fleek: Real-time monitoring, cultural sensitivity features, and user feedback tools.

AI Fairness 360: Over 70 fairness metrics and 11 bias mitigation algorithms.

Google What-If Tool: No-code bias detection with counterfactual analysis and demographic testing.

IBM Watson OpenScale: Automated bias detection, real-time monitoring, and remediation suggestions.

Hugging Face Bias Tools: Stereotype metrics, representation analysis, and real-time monitoring.

Fairlearn: Demographic parity analysis, dialect testing, and fairness optimization.

Microsoft Responsible AI Tools: Bias detection, error analysis, and real-time monitoring.

Quick Comparison:

| Tool | Key Features | Strength |
|---|---|---|
| Fleek | Real-time monitoring, cultural sensitivity tools | Customizable user feedback options |
| AI Fairness 360 | 70+ metrics, 11 mitigation algorithms | Open-source and detailed analysis |
| Google What-If Tool | Counterfactual analysis, no coding required | Easy to use for non-developers |
| IBM Watson OpenScale | Automated alerts, remediation suggestions | Enterprise-level integration |
| Hugging Face Bias Tools | Stereotype metrics, real-time monitoring | Strong focus on character design |
| Fairlearn | Dialect and demographic testing, fairness metrics | Open-source fairness optimization |
| Microsoft Responsible AI Tools | Error analysis, unified dashboard | Comprehensive enterprise solutions |

These tools make it easier to identify, address, and prevent bias in AI, creating better user experiences. Start using them today to ensure your AI characters are ethical and equitable.

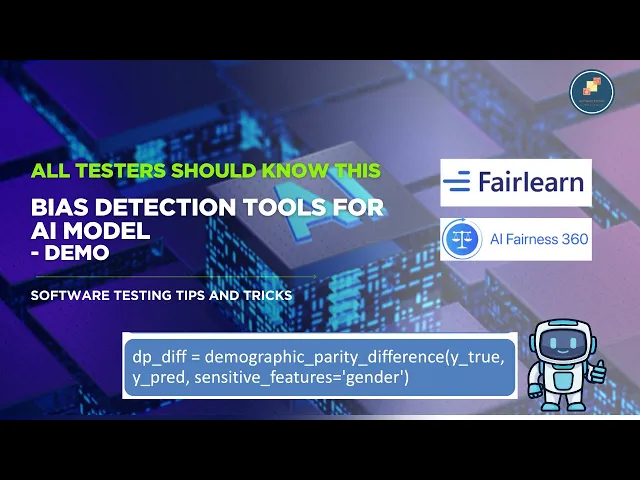


1. Fleek

Fleek is making strides in reducing bias in AI characters by offering real-time monitoring and behavior management tools. Its advanced detection system actively analyzes interactions across different demographic groups, flagging responses that could be problematic.

The platform also provides developers with a detailed behavior settings interface. This lets them fine-tune personality traits, communication styles, and ethical boundaries. These settings work seamlessly with feedback features to help maintain consistent ethical standards.

In one case study, Fleek's tools led to a 78% decrease in user-reported bias incidents - a testament to its effectiveness.

To tackle cross-cultural bias, Fleek includes tools designed for cultural sensitivity. Its ethics toolkit comes with pre-trained models that help AI characters handle cultural nuances. Developers can also customize parameters to ensure responses align with specific regional needs.

The platform’s continuous learning system identifies biased responses and adjusts future behavior accordingly. Performance analytics allow developers to track bias metrics over time, highlighting areas that may need improvement.

Fleek doesn’t stop at developer tools. It also empowers users to shape AI character behavior with intuitive controls like:

Adjusting conversation settings for sensitive topics

Personalizing bias filters to avoid offensive content

Using transparency features to understand how responses are generated

Submitting direct feedback via built-in reporting tools

On the data side, Fleek employs balancing techniques to ensure its training datasets are diverse and representative. Automated algorithms scan the data to catch and eliminate existing biases.

Additionally, Fleek's testing tools simulate a range of interactions to spot demographic inconsistencies, reinforcing its dedication to building fair and engaging AI systems.

2. AI Fairness 360

AI Fairness 360 (AIF360) is an open-source toolkit developed by IBM Research. It’s designed to identify and reduce bias in AI systems, offering over 70 fairness metrics and 11 bias mitigation algorithms to help create more equitable AI responses.

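At its core, AIF360 compares how a model treats different demographic groups, defined through protected attributes such as age, gender, or race. For AI characters, qualities like sentiment, language formality, and helpfulness first have to be scored per response so that the same group comparisons can be applied. Intersectional bias can be examined by defining groups from overlapping demographic traits.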

A compelling example of its impact comes from Capital One. In January 2024, they integrated AIF360 into their customer service AI systems. The result? A 47% reduction in gender and racial bias, all while maintaining 98% of the AI's functionality.

The toolkit employs three main strategies to address bias:

Pre-processing: Adjusts training data to minimize bias before the model is built.

In-processing: Builds fairness constraints or objectives into the training procedure itself.

Post-processing: Adjusts a trained model's outputs to further reduce bias, without retraining.
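To make the pre-processing strategy concrete, here is a minimal sketch of reweighing, one well-known pre-processing technique (AIF360 ships an implementation named `Reweighing`). The code below is plain Python rather than the AIF360 API, and the function name and toy data are assumptions made for illustration.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Return one weight per sample so that group and label look independent.

    weight(g, y) = P(g) * P(y) / P(g, y)
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of favorable labels (1) inside one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)

# Hypothetical toy data: group "a" receives the favorable label more often.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
```

Under-represented combinations (here, a favorable label in group "b") receive weights above 1, so a model trained with these sample weights sees both groups with the same weighted favorable rate.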

Another real-world success story is Kaiser Permanente. In September 2023, they used AIF360 to improve their patient triage AI system, which initially showed age-related bias. With the toolkit's analysis tools, their fairness score jumped from 0.76 to 0.92, where 1.0 represents perfect fairness.
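The case study does not say which metric produced these scores. One common ratio-style metric where 1.0 means parity is disparate impact: the favorable-outcome rate of one group divided by that of a reference group. The sketch below is plain Python with hypothetical group names and data, shown only to illustrate how such a score is computed.

```python
def selection_rate(predictions, groups, group):
    """Share of favorable predictions (1) within one group."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)

def disparate_impact(predictions, groups, unprivileged, privileged):
    """Ratio of favorable-outcome rates; 1.0 means both groups are treated alike."""
    return (
        selection_rate(predictions, groups, unprivileged)
        / selection_rate(predictions, groups, privileged)
    )

# Hypothetical triage outcomes: 1 = prioritized, 0 = not prioritized.
groups = ["under_65"] * 10 + ["65_plus"] * 10
predictions = [1] * 5 + [0] * 5 + [1] * 4 + [0] * 6

ratio = disparate_impact(predictions, groups, "65_plus", "under_65")
```

With these numbers the older group is prioritized 40% of the time against 50% for the reference group, so the ratio is 0.8. The sketch assumes the reference group has at least one favorable outcome; a production version would need to handle a zero denominator.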

AIF360 also provides interactive visualizations, making it easier for developers to track bias metrics over time, identify problem areas, and evaluate the effectiveness of mitigation efforts. Developers can even tailor fairness definitions to suit different regions and communities, ensuring AI systems respond appropriately to diverse audiences around the world.

To make things even more efficient, the toolkit supports automated fairness assessments, which can run on a schedule or be triggered by updates to the AI model. This automation, combined with research-backed algorithms, ensures that reducing bias remains a continuous and effective process.

3. Google What-If Tool

The Google What-If Tool is a visual resource for detecting and exploring bias in AI systems with minimal coding: once a model and dataset are loaded, the analysis itself happens in an interactive interface. It's especially useful for developers aiming to create fair and inclusive AI, offering a range of features to identify unintended biases.

One standout feature is its counterfactual analysis, which allows developers to tweak input variables and observe how predictions change. For example, a healthcare organization used this capability to uncover and address biased patterns in their patient triage AI, which had been unintentionally favoring certain demographic groups.
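The idea behind counterfactual analysis can be sketched in a few lines of Python: change only one attribute of a record and check whether the prediction changes. This is not the What-If Tool's own code. The function, the toy triage model, and the field names are all hypothetical stand-ins.

```python
def counterfactual_flips(model, records, attribute, values):
    """Return records whose prediction changes when only `attribute` changes."""
    flagged = []
    for record in records:
        outcomes = {}
        for value in values:
            variant = {**record, attribute: value}  # copy with one field swapped
            outcomes[value] = model(variant)
        if len(set(outcomes.values())) > 1:
            flagged.append((record, outcomes))
    return flagged

# Hypothetical stand-in model that (wrongly) uses the protected attribute.
def toy_triage_model(patient):
    score = patient["symptom_severity"]
    if patient["group"] == "b":
        score -= 1
    return "urgent" if score >= 5 else "routine"

patients = [
    {"symptom_severity": 5, "group": "a"},
    {"symptom_severity": 8, "group": "b"},
    {"symptom_severity": 2, "group": "a"},
]
flagged = counterfactual_flips(toy_triage_model, patients, "group", ["a", "b"])
```

Only the borderline patient is flagged here, which is typical: counterfactual differences tend to surface near decision boundaries, so test sets should include cases close to the threshold.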

The tool also provides performance visualization across different data segments, helping developers spot disparities in how the model interacts with various groups. Key testing capabilities include:

Threshold Adjustments: Fine-tune classification thresholds to align with fairness goals.

Demographic Analysis: Examine data through lenses like age, gender, or dialect to identify inconsistencies in responses.

Side-by-Side Comparisons: Compare different model versions to evaluate which one produces more equitable outcomes.
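As a rough illustration of threshold adjustment, the sketch below picks a separate classification threshold per group so that both groups end up with the same selection rate. It is a simplified stand-in for what an interactive tool lets you do with sliders. The helper names and scores are assumptions.

```python
def selection_rate(scores, threshold):
    """Share of scores at or above the threshold."""
    return sum(s >= threshold for s in scores) / len(scores)

def threshold_for_rate(scores, target_rate):
    """Pick the observed score whose selection rate is closest to target_rate."""
    candidates = sorted(set(scores))
    return min(candidates, key=lambda t: abs(selection_rate(scores, t) - target_rate))

# Hypothetical model scores for two demographic groups.
scores_a = [0.9, 0.8, 0.7, 0.4, 0.2]
scores_b = [0.6, 0.5, 0.45, 0.3, 0.1]

# A single shared threshold of 0.5 selects the groups at different rates.
shared_rate_a = selection_rate(scores_a, 0.5)
shared_rate_b = selection_rate(scores_b, 0.5)

# Per-group thresholds chosen to match group a's rate.
threshold_a = threshold_for_rate(scores_a, shared_rate_a)
threshold_b = threshold_for_rate(scores_b, shared_rate_a)
```

Equalizing selection rates is only one possible fairness goal. Matching error rates across groups would lead to different thresholds, so the target should follow from the fairness metric chosen for the project.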

Another notable feature is its ability to detect language bias. By analyzing responses to similar queries phrased in different dialects, the tool has highlighted discrepancies. For instance, studies found varying responses to queries in African American English versus Standard American English, showcasing the tool's sensitivity to linguistic nuances.

For developers, here are some best practices when using the tool:

Begin testing early in the development process.

Use diverse datasets to ensure broad coverage.

Establish clear fairness metrics to guide evaluations.

Perform regular bias assessments to stay on track.

While the What-If Tool is excellent at identifying bias, addressing those biases still requires manual intervention. Its interactive design, however, makes it an invaluable part of any strategy to create fair and unbiased AI systems.

4. IBM Watson OpenScale

IBM Watson OpenScale is designed to tackle bias in AI systems by offering automated bias detection and real-time monitoring. It also provides transparency into how AI models make decisions, helping developers better understand and refine their systems. Here's a closer look at its standout features:

Demographic Analysis: Monitors AI behavior across various user groups to spot discrepancies in responses.

Automated Alerts: Instantly flags potential bias when AI models show inconsistent patterns.

Remediation Suggestions: Offers actionable fixes, such as improving training data or tweaking models, to address identified biases.
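The alerting idea can be sketched independently of any vendor API. The class below keeps a sliding window of recent outcomes per group and raises a flag when the ratio of favorable outcomes drops below a cutoff. The class name, window size, and the 0.8 cutoff (borrowed from the common four-fifths rule of thumb) are assumptions, not OpenScale settings.

```python
from collections import deque

class BiasAlertMonitor:
    """Track recent outcomes per group and flag a low favorable-outcome ratio."""

    def __init__(self, monitored, reference, window=100, min_ratio=0.8):
        self.monitored = monitored
        self.reference = reference
        self.min_ratio = min_ratio
        self.outcomes = {
            monitored: deque(maxlen=window),
            reference: deque(maxlen=window),
        }

    def record(self, group, favorable):
        self.outcomes[group].append(1 if favorable else 0)

    def ratio(self):
        mon = self.outcomes[self.monitored]
        ref = self.outcomes[self.reference]
        if not mon or not ref or sum(ref) == 0:
            return None  # not enough data to compare yet
        return (sum(mon) / len(mon)) / (sum(ref) / len(ref))

    def alert(self):
        ratio = self.ratio()
        return ratio is not None and ratio < self.min_ratio

monitor = BiasAlertMonitor(monitored="group_b", reference="group_a", window=4)
for favorable in [True, True, True, False]:
    monitor.record("group_a", favorable)
for favorable in [True, False, False, False]:
    monitor.record("group_b", favorable)
first_alert = monitor.alert()   # group_b lags well behind group_a

for favorable in [True, True, True]:
    monitor.record("group_b", favorable)
second_alert = monitor.alert()  # older outcomes have left the window
```

A small window reacts quickly but is noisy, while a large window is stable but slow to notice a regression, so the window size is worth tuning against real traffic volume.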

This platform seamlessly integrates with AI systems, ensuring bias monitoring is a continuous process. To make the most of it, consider these best practices:

Start bias monitoring early, during the development phase.

Conduct regular reviews to assess performance across different user demographics.

Use diverse and representative data for training to minimize bias from the outset.

IBM Watson OpenScale is especially valuable for enterprises scaling AI systems. It ensures models perform consistently across deployment environments while meeting fairness and compliance standards. By offering detailed insights into decision-making, it helps organizations maintain ethical AI practices.

For developers, the platform provides practical tools to address fairness challenges:

| Feature | Purpose | Benefit |
|---|---|---|
| Bias Detection | Identifies fairness issues | Proactively prevents bias |
| Model Explainability | Examines decision-making logic | Clarifies sources of bias |
| Automated Alerts | Flags emerging problems | Enables quick responses |
| Remediation Tools | Suggests fixes | Simplifies bias correction |

These capabilities work together to provide real-time feedback, making AI interactions more balanced and fair. Up next, we’ll look at additional tools that further refine bias mitigation in AI systems.

5. Hugging Face Bias Tools

Hugging Face offers a range of tools aimed at detecting and addressing bias in AI systems, particularly in character development. Their Evaluate library plays a central role in automating bias detection, helping developers create more balanced and inclusive AI characters.

Here’s a breakdown of some key features and their impact:

| Feature | Function | Impact on AI Characters |
|---|---|---|
| Stereotype Metrics | Measures associations between attributes and groups | Reduces the risk of AI characters reinforcing harmful stereotypes |
| Representation Analysis | Examines demographic portrayal balance | Promotes diverse and equitable character responses |
| Counterfactual Testing | Tests responses across various demographics | Ensures consistent behavior across different user groups |
| Real-time Monitoring | Tracks interactions to detect emerging bias | Helps quickly identify and address problematic patterns |

These tools not only detect biases but also offer actionable solutions to correct them. Hugging Face’s Model Cards framework adds another layer of transparency by documenting the limitations, testing methods, and evaluation processes of AI models. This helps developers clearly communicate how their AI characters have been assessed and what constraints exist in their design.

Debiasing Recommendations from Hugging Face

To further refine AI character behavior, Hugging Face suggests the following strategies:

Dataset Preparation:

Use counterfactual data augmentation to counteract stereotypes.

Build balanced training datasets that include diverse dialects and cultural contexts.

Leverage specialized datasets to enhance multilingual and multicultural awareness.

Runtime Evaluation:

Implement automated tests to detect emerging biases during interactions.

Evaluate metrics like engagement, helpfulness, and personality consistency to prevent bias mitigation efforts from making characters overly generic or dull.
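Counterfactual data augmentation, mentioned under dataset preparation, can be sketched as follows: for each training sentence, add a copy with gendered terms swapped so the model sees both variants equally often. This is a deliberately tiny illustration with a hypothetical swap list, not a Hugging Face utility. Real word lists are much larger and must handle ambiguous words such as "her".

```python
import re

# Hypothetical, intentionally small swap list of unambiguous pairs.
SWAP_PAIRS = [("he", "she"), ("man", "woman"), ("father", "mother"), ("son", "daughter")]
SWAPS = {}
for left, right in SWAP_PAIRS:
    SWAPS[left] = right
    SWAPS[right] = left

# Whole-word, case-insensitive match on any term in the swap list.
PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, SWAPS)) + r")\b", re.IGNORECASE)

def counterfactual(text):
    """Swap each listed term for its counterpart, keeping initial capitalization."""
    def swap(match):
        word = match.group(0)
        replacement = SWAPS[word.lower()]
        return replacement.capitalize() if word[0].isupper() else replacement
    return PATTERN.sub(swap, text)

def augment(dataset):
    """Return the dataset plus a swapped copy of every sentence that changed."""
    augmented = list(dataset)
    for text in dataset:
        flipped = counterfactual(text)
        if flipped != text:
            augmented.append(flipped)
    return augmented

examples = ["He is a doctor.", "The nurse arrived."]
augmented = augment(examples)
```

Sentences without any listed term are left alone, so the augmented set only grows where a stereotype-relevant swap is possible.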

Hugging Face’s approach emphasizes maintaining cultural sensitivity while minimizing bias. Their platform integrates seamlessly with existing MLOps workflows, enabling efficient and systematic bias monitoring. It’s particularly effective at distinguishing between harmful stereotypes and respectful cultural nuances, ensuring AI characters remain both inclusive and engaging.

As of 2025, Hugging Face continues to enhance its tools, introducing more sophisticated methods for detecting contextual bias and expanding multilingual evaluation capabilities. These ongoing advancements make the platform a valuable resource for developers striving to create fair and culturally aware AI systems.

6. Fairlearn

Fairlearn, an open-source toolkit that originated at Microsoft Research and is now maintained as a community project, is designed to evaluate and address fairness issues in AI systems. It focuses on measuring and improving fairness across different demographic groups, offering tools that help developers create more equitable AI interactions.

Core Testing Features

Fairlearn stands out for its robust testing capabilities. Here are some of its key methods:

| Testing Feature | Purpose | Impact on AI Characters |
|---|---|---|
| Demographic Parity Analysis | Evaluates consistency of responses across user groups | Ensures fair interactions for diverse users |
| Dialect Variation Testing | Analyzes response differences across various dialects | Reduces bias against users with unique speech patterns |
| Error Rate Assessment | Tracks error rates by demographic group | Promotes accuracy and fairness across interactions |
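The first and last rows of the table boil down to computing a metric separately for each group and then comparing the results. Fairlearn exposes this through `MetricFrame` and helpers such as `demographic_parity_difference` in `fairlearn.metrics`. The sketch below reproduces the idea in plain Python so it runs without dependencies, with function names and data that are assumptions.

```python
from collections import defaultdict

def by_group(y_true, y_pred, groups):
    """Return {group: {"selection_rate": ..., "error_rate": ...}}."""
    rows = defaultdict(list)
    for truth, pred, group in zip(y_true, y_pred, groups):
        rows[group].append((truth, pred))
    report = {}
    for group, pairs in rows.items():
        n = len(pairs)
        report[group] = {
            "selection_rate": sum(pred for _, pred in pairs) / n,
            "error_rate": sum(truth != pred for truth, pred in pairs) / n,
        }
    return report

def largest_gap(report, metric):
    """Difference between the highest and lowest group value for one metric."""
    values = [row[metric] for row in report.values()]
    return max(values) - min(values)

# Hypothetical labels and predictions for two groups.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

report = by_group(y_true, y_pred, groups)
parity_gap = largest_gap(report, "selection_rate")
error_gap = largest_gap(report, "error_rate")
```

In this toy data both groups have the same error rate, yet group "a" is selected three times as often. That is why it helps to track more than one fairness metric: a model can look fair on one and unfair on another.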

Practical Mitigation Strategies

Fairlearn goes beyond identifying biases by offering tools to address them. For example:

Exponentiated Gradient: This reduction method repeatedly retrains the underlying model on reweighted training data, steering it toward a chosen fairness constraint while preserving as much performance as possible.

Grid Search: Developers can systematically explore the trade-off between fairness and accuracy, helping them fine-tune AI behavior for better outcomes.
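The trade-off that a grid search explores can be illustrated with a much simpler stand-in: enumerate candidate models, measure accuracy and disparity for each, and keep the most accurate one that stays within a disparity budget. In the sketch below the "candidates" are just per-group thresholds on fixed scores, which is an assumption made to keep the example short. Fairlearn's `GridSearch` instead trains a separate model for each point on its grid.

```python
from itertools import product

def evaluate(thresholds, scores, labels, groups):
    """Return (accuracy, selection-rate gap) for one set of per-group thresholds."""
    preds = [int(s >= thresholds[g]) for s, g in zip(scores, groups)]
    accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
    rates = []
    for name in thresholds:
        member_preds = [p for p, g in zip(preds, groups) if g == name]
        rates.append(sum(member_preds) / len(member_preds))
    return accuracy, max(rates) - min(rates)

def grid_search(scores, labels, groups, grid, max_disparity):
    """Most accurate candidate whose selection-rate gap stays within max_disparity."""
    names = sorted(set(groups))
    best = None
    for combo in product(grid, repeat=len(names)):
        thresholds = dict(zip(names, combo))
        accuracy, disparity = evaluate(thresholds, scores, labels, groups)
        if disparity <= max_disparity and (best is None or accuracy > best[1]):
            best = (thresholds, accuracy, disparity)
    return best

# Hypothetical scores and labels for two groups.
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.5, 0.4, 0.2]
labels = [1, 1, 1, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
grid = [0.35, 0.45, 0.55, 0.65]

strict = grid_search(scores, labels, groups, grid, max_disparity=0.0)
relaxed = grid_search(scores, labels, groups, grid, max_disparity=0.25)
```

Comparing `strict` and `relaxed` shows the trade-off directly: in this toy data, insisting on identical selection rates costs one correct prediction out of eight.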

These strategies are particularly useful for refining AI systems to perform equitably in real-world scenarios.

Real-World Applications

Fairlearn has proven its value across various industries:

Educational Platforms: One platform used Fairlearn to evaluate its AI tutoring system. By identifying disparities in how the AI responded to different language patterns, the developers implemented adjustments that reduced bias while keeping the teaching style engaging and effective.

Gaming Development: Gaming companies have utilized Fairlearn to refine their non-player characters (NPCs). By addressing subtle biases in character responses, these companies have created more inclusive and balanced gaming experiences.

Advanced Monitoring Features

Fairlearn also includes a dashboard that supports continuous monitoring, making it easier for developers to:

Observe response patterns across different demographic groups

Detect potential biases early on

Measure the effectiveness of mitigation efforts

Document improvements for transparency and stakeholder communication

This real-time monitoring ensures that fairness remains a priority throughout the AI development lifecycle, helping AI systems maintain unbiased behavior as they evolve.

7. Microsoft Responsible AI Tools

Microsoft's Responsible AI Tools offer a suite designed to ensure fairness and reduce bias in AI character interactions. Built on prior advancements, these tools aim to improve the reliability and equity of AI-driven behavior.

Core Components

This toolkit includes several essential tools that work together to promote fairness in AI characters:

| Component | Primary Function | Application in AI Characters |
|---|---|---|
| InterpretML | Explains decisions | Helps understand response patterns and reasoning |
| Error Analysis | Detects biases | Identifies recurring issues in character responses |
| Content Safety Tools | Prevents harmful content | Ensures safer interactions with AI characters |
| RAI Dashboard | Unified monitoring | Tracks fairness metrics across interactions |

Real-Time Monitoring and Insights

The RAI Dashboard provides real-time monitoring to maintain consistency in AI character interactions. Its visualization tools allow developers to:

Analyze response patterns across various demographic groups.

Evaluate the success of bias mitigation strategies.

Create detailed fairness reports for stakeholders.

Practical Applications

The Error Analysis tool helps pinpoint patterns of failure or bias in AI character behavior. This enables developers to make targeted improvements without compromising overall functionality. These tools integrate seamlessly into workflows, complementing earlier solutions by embedding fairness checks into the development process.
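A simplified version of cohort-based error analysis is sketched below: group evaluation records by one or more attributes and rank the cohorts by error rate. This is a plain-Python illustration with hypothetical field names, not the Responsible AI tooling itself, which discovers cohorts automatically rather than relying on a fixed list of keys.

```python
from collections import defaultdict

def error_rates_by_cohort(records, cohort_keys):
    """Rank cohorts (combinations of cohort_keys) by error rate, worst first."""
    totals = defaultdict(lambda: [0, 0])  # cohort -> [errors, count]
    for record in records:
        cohort = tuple(record[key] for key in cohort_keys)
        totals[cohort][0] += int(record["predicted"] != record["expected"])
        totals[cohort][1] += 1
    ranked = [
        (cohort, errors / count, count)
        for cohort, (errors, count) in totals.items()
    ]
    return sorted(ranked, key=lambda row: row[1], reverse=True)

# Hypothetical moderation results for character responses.
records = [
    {"language": "en", "age_band": "18-34", "predicted": "ok", "expected": "ok"},
    {"language": "en", "age_band": "18-34", "predicted": "ok", "expected": "ok"},
    {"language": "en", "age_band": "55+", "predicted": "ok", "expected": "flag"},
    {"language": "en", "age_band": "55+", "predicted": "ok", "expected": "ok"},
    {"language": "es", "age_band": "55+", "predicted": "ok", "expected": "flag"},
    {"language": "es", "age_band": "55+", "predicted": "flag", "expected": "ok"},
    {"language": "es", "age_band": "18-34", "predicted": "ok", "expected": "ok"},
]

ranked = error_rates_by_cohort(records, ["language", "age_band"])
worst_cohort, worst_rate, worst_count = ranked[0]
```

Using two keys at once surfaces intersectional problems: here the failures concentrate in one language and age combination, which a per-language or per-age breakdown alone would only partly reveal. The count is returned alongside each rate because small cohorts can show extreme rates by chance.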

Advanced Bias Detection

Advanced algorithms are used for demographic analysis and content safety verification, helping uncover subtle biases that might otherwise go unnoticed.

Integration and Accessibility

Microsoft's tools are designed to work across multiple languages and contexts, ensuring bias checks are adaptable to various environments. They can be easily integrated into existing workflows, making them a versatile addition for developers.

Regular Updates for Evolving Needs

Microsoft frequently updates this suite of tools to align with industry developments. Recent improvements include:

Simplified interfaces for easier use.

Enhanced visualization tools for better data interpretation.

Improved support for handling complex character behaviors.

Automated bias detection algorithms for faster identification of issues.

These updates reflect Microsoft's ongoing commitment to creating equitable and unbiased AI systems.

Conclusion

Bias reduction tools have become essential in shaping AI characters that are both reliable and inclusive.

Impact on AI Character Quality

Research from MIT highlights that targeted bias reduction techniques significantly enhance AI character performance. This progress is particularly evident in platforms like Fleek, which prioritize creating genuine and impartial AI companions. These advancements yield tangible benefits that directly improve user experiences.

Measurable Improvements

The implementation of bias reduction tools has led to noticeable gains, as shown below:

| Aspect | Impact | Benefit |
|---|---|---|
| Language Processing | Minimized dialect-based bias | More inclusive interactions |
| Cultural Sensitivity | Increased awareness of diversity | Broader appeal to users |
| Response Accuracy | Enhanced context understanding | Greater user satisfaction |
| Fairness Metrics | Ongoing monitoring and adjustments | Consistent quality over time |

Future-Proofing AI Development

The path to creating resilient AI begins with integrating bias reduction at every stage of development. As of 2025, this approach is no longer optional - it’s a necessity. These tools not only address current challenges but also prepare AI systems for future demands. Experts emphasize that fully resolving AI bias will require deeper research to tackle the underlying biases rooted in society.

Practical Implementation

For developers looking to apply these advancements, the following steps are crucial:

Incorporate diverse and representative training data.

Use real-time tools to detect and address bias.

Conduct regular audits of AI responses.

Gather and analyze user feedback to refine systems.

By following these practices, developers can create AI characters that engage thoughtfully and uphold ethical principles. These improvements not only boost performance but also align with the core values driving AI innovation.

Investing in bias reduction tools represents a commitment to fairness and inclusivity, ensuring AI characters can serve a wide range of users effectively. As these tools evolve, they will remain a cornerstone in the ongoing development of ethical and impactful AI systems.

FAQs

How do tools like Fleek help reduce cultural bias and ensure demographic sensitivity in AI characters?

Tools like Fleek aim to reduce cultural bias and encourage demographic sensitivity in AI characters by offering features that focus on inclusivity and fairness. For instance, Fleek enables creators to adjust AI behavior, dialogue, and personality traits to fit diverse cultural contexts while steering clear of stereotypes. This level of customization gives users the ability to design AI characters that genuinely respect and represent the varied needs of different audiences.

Moreover, platforms like Fleek incorporate ethical AI guidelines and advanced algorithms to identify and address potential biases during the development process. This approach ensures that AI characters are not only engaging but also mindful of cultural nuances and inclusive in their interactions.

How can developers effectively integrate tools to reduce bias in AI character behavior?

To reduce bias in AI character behavior, developers can take a thoughtful and organized approach:

Identify bias sources: Start by examining your data, algorithms, and design processes to uncover areas where bias might exist. Understanding these origins is key to addressing them effectively.

Use bias detection tools: Platforms like Fleek are designed to help spot and address bias in AI systems. These tools can analyze datasets and highlight imbalances or stereotypes that may influence behavior.

Test and refine consistently: Regularly test your AI characters in a variety of situations to ensure they act fairly and inclusively. Gather user feedback and make adjustments as needed to improve their behavior over time.

Taking these actions can lead to AI characters that better reflect ethical standards and meet user expectations.

How does counterfactual analysis in tools like the Google What-If Tool help identify and reduce bias in AI systems?

Counterfactual Analysis: A Tool for Tackling Bias in AI

Counterfactual analysis is a method used to identify and address bias in AI systems by posing "what if" scenarios. Essentially, it examines how an AI model's predictions would shift if specific input variables were changed. For instance, it can explore whether altering a user's gender or ethnicity affects the model's results, making it easier to pinpoint potential biases.

Tools like Google's What-If Tool make this process more accessible. With its user-friendly interface, developers can simulate these scenarios, uncover unfair patterns in AI behavior, and fine-tune models to promote fairness. Integrating counterfactual analysis into your development process is a practical way to create AI systems that are more ethical and less biased.