AI Agent Hosting, Orchestration, and Processing Made Easy With Fleek

Sophisticated AI agents capable of complex reasoning, task automation, and even autonomous decision-making are a reality today.

The rise of AI agents has introduced a new paradigm in software architecture, one where hosting isn’t just about serving static content, orchestration goes beyond container management, and processing demands stretch far beyond traditional compute workloads.

Understanding these three pillars — hosting, orchestration, and processing — is crucial for anyone looking to deploy AI agents in production.

In this guide, we’ll break down each component, explore the technical challenges they present, and see how Fleek improves AI agent deployment.

Understanding the Trifecta: Hosting, Orchestration, and Processing

- AI Agent Hosting

AI agent hosting goes beyond traditional application hosting. Your infrastructure needs to maintain persistent connections to language models, handle state management differently, and ensure consistent performance for real-time interactions. Unlike conventional web applications, AI agents require specialized runtime environments that can manage model loading, handle prompt engineering, and maintain conversation context.

- Agent Orchestration

While hosting provides the foundation, orchestration is what brings your AI agents to life. Think of orchestration as the conductor of an AI orchestra — it coordinates how multiple agents interact, manages their workflows, and ensures they work in harmony.

This includes:

- Managing agent lifecycles (initialization, scaling, shutdown)

- Coordinating multi-agent conversations and task delegation

- Handling fallbacks and failovers when agents encounter issues

- Balancing workloads across your agent fleet

- Inference Processing

At the heart of every AI agent deployment is inference processing – the actual computational work of generating responses, making decisions, and processing inputs. This component demands careful consideration because it directly impacts:

- Response latency and user experience

- Resource utilization and costs

- Maximum concurrent users your system can handle

What makes these components particularly interesting is how they interact:

- When your hosting layer efficiently manages resources, it enables smoother orchestration

- Smart orchestration reduces unnecessary inference processing by routing requests optimally

- Efficient inference processing allows your hosting layer to handle more concurrent agents

Think of it like a well-oiled machine:

Hosting provides a stable platform, orchestration ensures everything moves in coordination, and processing delivers the actual output.

In the next section, we’ll explore the specific technical challenges developers face while deploying AI agents and their relationship to infrastructure components.

The Hidden Risks in AI Agent Deployments

Here are five key issues with the current state of AI agent deployments:

- Centralization risks

Traditional deployments host their data and route all inference through centralized providers, creating a single point of failure and trust. Users must accept whatever changes providers make to models, pricing, or policies without transparency.

Reliance on centralized providers also can cause vendor lock-in to specific inference or hosting providers.

- Privacy risks

Most AI agent deployments require sending raw user data through third-party servers without clear guarantees about data retention, usage, or protection. This creates significant privacy risks for sensitive use cases. Input data can be logged and stored without consent or training data can be harvested from interactions.

- Censorship

AI agents deployed on centralized platforms are at the risk of facing arbitrary content restrictions and sudden service terminations. Developers have limited recourse when platforms change policies or decide certain agent behaviors are unacceptable.

- Trust-dominant operations

Developers must trust that platforms are executing their agent code as intended, with no way to verify model versions, inference integrity, or data handling practices. AI agents can inherit biases or generate hallucinated responses in centralized systems.

There is no transparency into model decision-making and it is difficult to audit inference patterns.

- Scaling uncertainties

Current platforms provide limited guarantees about how agents will perform under load, leaving developers uncertain about reliability during traffic spikes or concurrent usage scenarios.

Alongside, there is also risk of the AI agent performing inconsistently under load or outside certain geographic boundaries.

The future of AI agent infrastructure must address these fundamental risks while maintaining the performance and scalability developers need.

In the next section, we’ll explore how Fleek’s infrastructure specifically tackles these challenges.

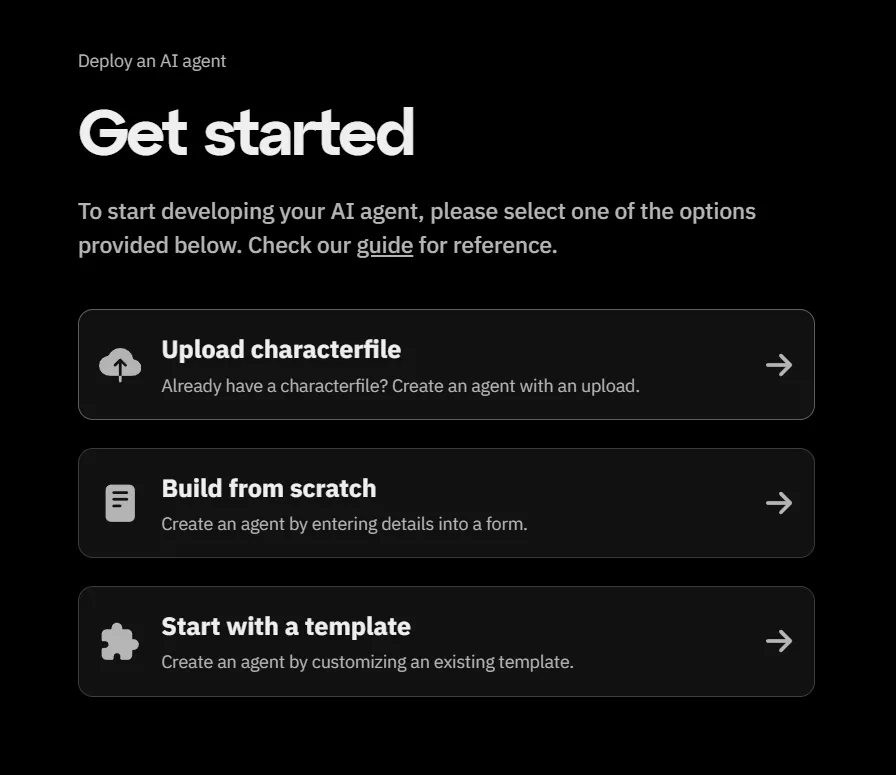

Enter Fleek: One-Click AI Agent Deployment

Fleek reimagines AI agent infrastructure through a unique combination of edge computing, trustless architecture, and developer-first design.

One-click deployment is a one-click solution for creating and deploying an AI agent using ai16z’s Eliza framework.

It leverages Fleek Machines’ infrastructure and exposes it to a larger audience by way of AI agent deployments. It streamlines the process from uploading agent character files to completing deployment in less than 5 minutes.

With this, developers enjoy the following:

- Simplify Deployment process

By uploading the AI agent’s character files, and generating a URL which developers can use to control the agent from their CLI.

It also supports integration with Github, which facilitates continuous integration and deployment (CI/CD) workflows, allowing developers to link their repositories and go live with minimal effort.

- Scalability and Performance

Fleek’s global deployment capabilities ensure that AI agents operate with high performance, utilizing a network of over 100 edge locations to deliver fast loading times worldwide. This infrastructure allows AI agents to scale effortlessly, handling increased workloads without compromising performance.

- Orchestration and Coordination

With the introduction of Fleek Machines, developers can deploy lightweight Trusted Execution Environment (TEE) virtual machines with Docker support, enabling secure and verifiable execution of AI agents. This feature simplifies the orchestration of multiple AI agents, ensuring they work together seamlessly to execute tasks effectively.

- Cost Efficiency

By optimizing resource allocation and automating processes, Fleek helps reduce operational costs associated with AI management. Each AI agent costs as low as $20/m compared to other cloud providers that charge per request or second.

How One-Click Deployment Works?

- Launch your agents in a globally distributed, secure, and privacy-preserving environment.

- Handle increased loads effortlessly, ensuring your agents perform reliably under diverse conditions.

- Control the agents with commands such as

fleek kill bot,fleek refresh botetc through CLI.

Build Functional AI Agents with One-click Deployment

AI agents do not have to face challenges in achieving seamless hosting, orchestration and processing.

With just Fleek’s one-click deployment, watch your AI agents enjoy a simplified deployment process, high scalability and performance, verifiable and secure execution environment, integration without complex setup and affordable cost.

Start today: https://fleek.xyz/agents/

Resources:

- Ai Agents docs: https://fleek.xyz/docs/ai-agents/

- Quick Start Guide: https://fleek.xyz/guides/eliza-guide/

- One-click deployment announcement blog:https://fleek.xyz/blog/announcements/fleek-ai-agent-hosting-eliza-framework/